首先我们看公式:

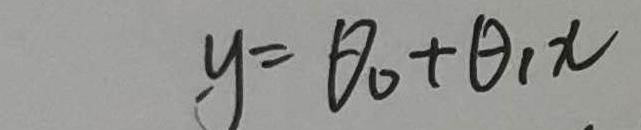

这个是要拟合的函数

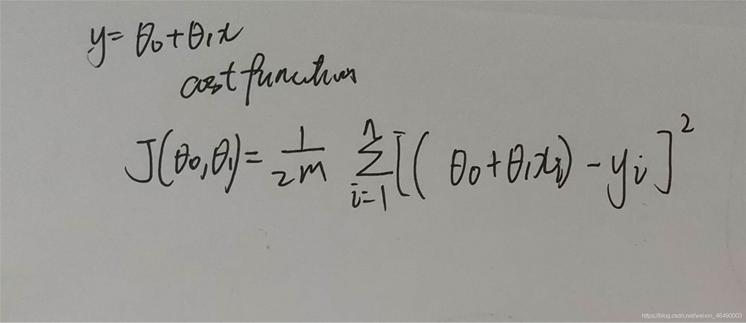

然后我们求出它的损失函数, 注意:这里的n和m均为数据集的长度,写的时候忘了

注意,前面的theta0-theta1x是实际值,后面的y是期望值

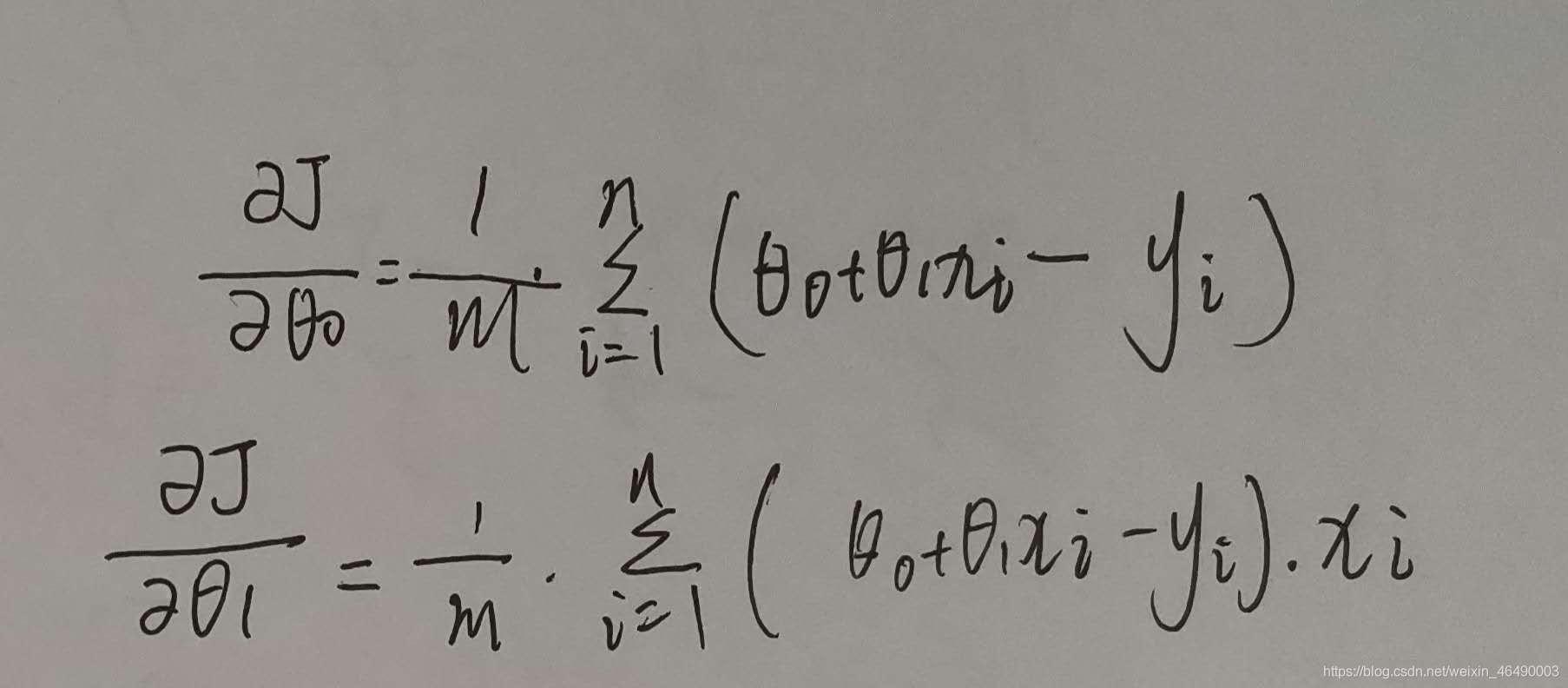

接着我们求出损失函数的偏导数:

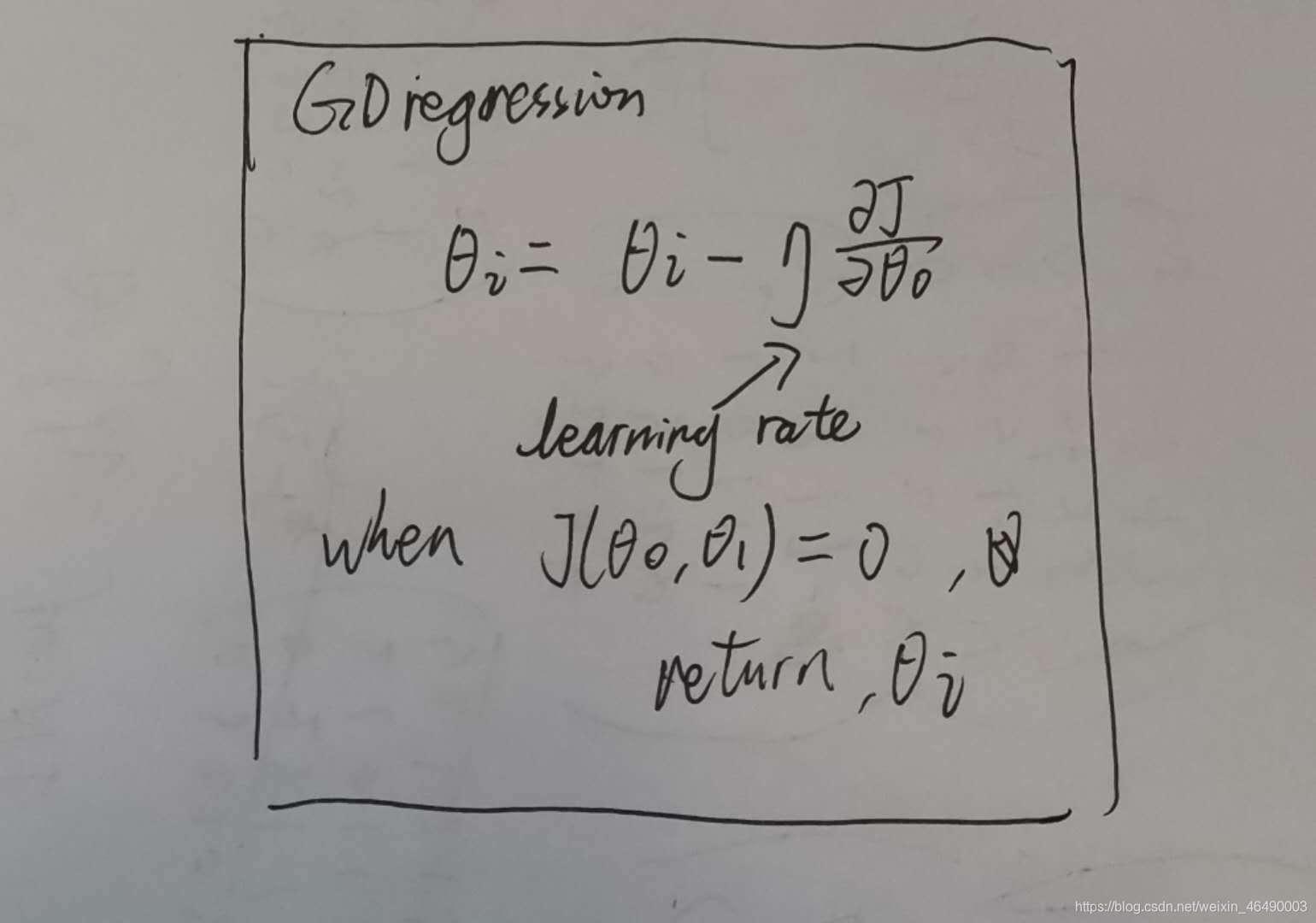

最终,梯度下降的算法:

学习率一般小于1,当损失函数是0时,我们输出theta0和theta1.

接下来上代码!class LinearRegression(): def __init__(self, data, theta0, theta1, learning_rate): self.data = data self.theta0 = theta0 self.theta1 = theta1 self.learning_rate = learning_rate self.length = len(data) # hypothesis def h_theta(self, x): return self.theta0 + self.theta1 * x # cost function def J(self): temp = 0 for i in range(self.length): temp += pow(self.h_theta(self.data[i][0]) - self.data[i][1], 2) return 1 / (2 * self.m) * temp # partial derivative def pd_theta0_J(self): temp = 0 for i in range(self.length): temp += self.h_theta(self.data[i][0]) - self.data[i][1] return 1 / self.m * temp def pd_theta1_J(self): temp = 0 for i in range(self.length): temp += (self.h_theta(data[i][0]) - self.data[i][1]) * self.data[i][0] return 1 / self.m * temp # gradient descent def gd(self): min_cost = 0.00001 round = 1 max_round = 10000 while min_cost < abs(self.J()) and round <= max_round: self.theta0 = self.theta0 - self.learning_rate * self.pd_theta0_J() self.theta1 = self.theta1 - self.learning_rate * self.pd_theta1_J() print('round', round, ':\t theta0=%.16f' % self.theta0, '\t theta1=%.16f' % self.theta1) round += 1 return self.theta0, self.theta1 def main(): data = [[1, 2], [2, 5], [4, 8], [5, 9], [8, 15]] # 这里换成你想拟合的数[x, y] # plot scatter x = [] y = [] for i in range(len(data)): x.append(data[i][0]) y.append(data[i][1]) plt.scatter(x, y) # gradient descent linear_regression = LinearRegression(data, theta0, theta1, learning_rate) theta0, theta1 = linear_regression.gd() # plot returned linear x = np.arange(0, 10, 0.01) y = theta0 + theta1 * x plt.plot(x, y) plt.show()到此这篇关于python 还原梯度下降算法实现一维线性回归 的文章就介绍到这了,更多相关python 一维线性回归 内容请搜索python博客以前的文章或继续浏览下面的相关文章希望大家以后多多支持python博客!

-

<< 上一篇 下一篇 >>

python 还原梯度下降算法实现一维线性回归

看: 1091次 时间:2020-12-09 分类 : python教程

- 相关文章

- 2021-12-20Python 实现图片色彩转换案例

- 2021-12-20python初学定义函数

- 2021-12-20图文详解Python如何导入自己编写的py文件

- 2021-12-20python二分法查找实例代码

- 2021-12-20Pyinstaller打包工具的使用以及避坑

- 2021-12-20Facebook开源一站式服务python时序利器Kats详解

- 2021-12-20pyCaret效率倍增开源低代码的python机器学习工具

- 2021-12-20python机器学习使数据更鲜活的可视化工具Pandas_Alive

- 2021-12-20python读写文件with open的介绍

- 2021-12-20Python生成任意波形并存为txt的实现

-

搜索

-

-

推荐资源

-

Powered By python教程网 鲁ICP备18013710号

python博客 - 小白学python最友好的网站!